This was the test I most eagerly anticipated because of the lack of information on the web regarding running a Xeon-based system at a reduced memory speed. Here I am at Cisco, the company that produces one of the only blades in the industry capable of supporting both the top-bin E5-2690 processor and 24 DIMMs (HP and Dell can't say the same), yet I didn't know the performance impact of using all 24 DIMM slots. Sure, technically I could tell you that the E5-26xx memory bus runs at 1600MHz at two DIMMs per channel (16 DIMMs) and at a slower speed at three DIMMs per channel (24 DIMMs), but how does a change in MHz on a memory bus affect the entire system? Keep reading to find out.

Speaking of memory, don't forget that this blog is just one in a series of blogs covering VDI:

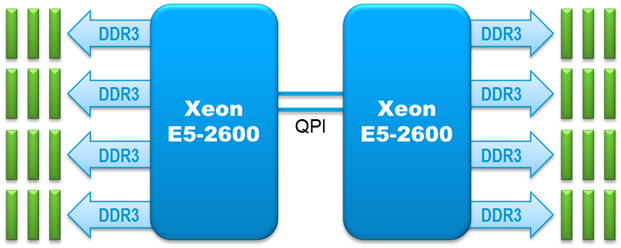

The situation. As you can see in the 2-socket block diagram below, each E5-2600 processor has four memory channels and supports up to three DIMMs per channel. For a 2-socket blade, that's 24 DIMMs. That's a lot of DIMMs. If you populate either 8 or 16 DIMMs (1 or 2 DIMMs per channel), the memory bus runs at the full 1600MHz (when using appropriately rated DIMMs). But when you add a third DIMM to each channel (for 24 DIMMs), the bus slows down. When we performed this testing, going from 16 to 24 DIMMs slowed the entire memory bus to 1066MHz, so that's what you'll see in the results. Cisco has since qualified running the memory bus at 1333MHz in UCSM maintenance releases 2.0(5a) and 2.1(1b), so running updated UCSM firmware should yield even better results than we saw in our testing.
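
To make the population rules concrete, here is a minimal Python sketch that counts the DIMMs on a 2-socket blade and maps DIMMs per channel (DPC) to the bus speeds reported in this post. The function and constant names are hypothetical, and the speed table simply encodes the numbers above (1600MHz at 1 or 2 DPC, 1066MHz at 3 DPC as tested, 1333MHz at 3 DPC with the newer UCSM releases). It assumes 1600MHz-rated DIMMs and is not an official Cisco qualification matrix.

```python
# Hypothetical sketch of the DIMM-population rules described in this post
# for a 2-socket E5-2600 blade. Speeds are the values reported here,
# assuming 1600MHz-rated DIMMs; not an official qualification matrix.

SOCKETS = 2
CHANNELS_PER_SOCKET = 4
MAX_DIMMS_PER_CHANNEL = 3


def _check_dpc(dimms_per_channel: int) -> None:
    # E5-2600 supports 1 to 3 DIMMs per channel.
    if not 1 <= dimms_per_channel <= MAX_DIMMS_PER_CHANNEL:
        raise ValueError("E5-2600 supports 1 to 3 DIMMs per channel")


def total_dimms(dimms_per_channel: int) -> int:
    """Total DIMMs on the blade for a given DIMMs-per-channel value."""
    _check_dpc(dimms_per_channel)
    return SOCKETS * CHANNELS_PER_SOCKET * dimms_per_channel


def bus_speed_mhz(dimms_per_channel: int, updated_firmware: bool = False) -> int:
    """Memory bus speed as reported in this post."""
    _check_dpc(dimms_per_channel)
    if dimms_per_channel <= 2:
        return 1600
    # 3 DPC: 1066MHz during our testing, 1333MHz after the UCSM
    # 2.0(5a)/2.1(1b) qualification.
    return 1333 if updated_firmware else 1066


for dpc in (1, 2, 3):
    print(f"{dpc} DPC -> {total_dimms(dpc)} DIMMs at {bus_speed_mhz(dpc)}MHz")
```

Running it prints 8, 16, and 24 DIMMs, with only the 24-DIMM configuration dropping below 1600MHz.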

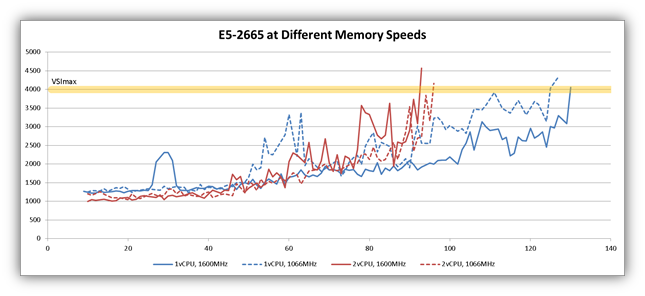

As we've done in all of our tests, we looked at two different blades with two very different processors. Let's start with the results for the E5-2665 processor. The following graph summarizes the results from four different test runs. Let's focus on the blue lines. We tested 1vCPU virtual desktops with the memory bus running at 1600MHz (the solid blue line) and 1066MHz (the dotted blue line). The test at 1600MHz achieved greater density, but only by 4%. That difference is effectively negligible because the load in these tests is random: LoginVSI is designed to randomize the load.

Looking at the red lines now (2vCPU desktops), we see that the system with the slower memory bus speed achieved greater density by about 3%. Is it significant that the system with the slower memory bus achieved greater density? Nah. Again, 3% is negligible for a random workload. Both the red and blue curves show that overall density is not significantly impacted by a change in memory bus speed from 1600MHz to 1066MHz (a 33% reduction in speed).
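
The "33% reduction" figure is plain arithmetic on the two bus speeds. A small Python helper (the name `percent_reduction` is just for illustration) reproduces it, and the same formula shows that the 1333MHz setting qualified in later UCSM releases would be roughly a 17% reduction. That second number is arithmetic only, not a measured result from these tests.

```python
def percent_reduction(baseline_mhz: float, reduced_mhz: float) -> float:
    """Percentage drop in bus speed from baseline_mhz to reduced_mhz."""
    return (baseline_mhz - reduced_mhz) / baseline_mhz * 100


# Speed actually tested at 3 DIMMs per channel.
print(f"1600 -> 1066MHz: {percent_reduction(1600, 1066):.1f}% slower")
# Speed qualified in later UCSM releases (not tested here).
print(f"1600 -> 1333MHz: {percent_reduction(1600, 1333):.1f}% slower")
```

This prints 33.4% and 16.7%, respectively.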

Although the differences in overall density were not significant, note that the 1vCPU test (the blue lines) showed lower latency for the system with the faster memory bus. While the host's overall density was not affected, individual VM performance was.

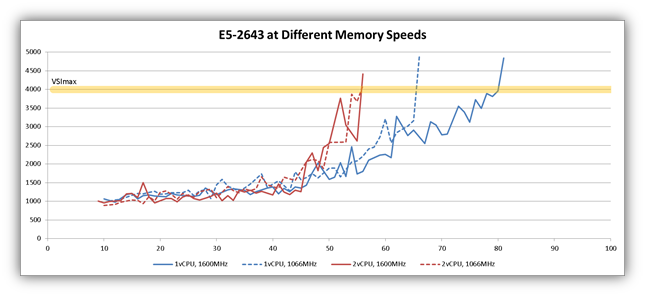

The blade with the E5-2643 processors told a different story. Let's start with the red curves (2vCPU) because that's really easy to summarize: there was no difference in density caused by slowing the memory bus.

Looking at the blue curves (1vCPU), there is actually a dramatic 23% difference in density. It appears that the E5-2643 (which has half as many cores as the E5-2665) is more sensitive to changes in memory speed. Keep in mind that these tests tax the E5-2643 with a much higher vCPU/core ratio, given that it has half as many cores.
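
To see why the E5-2643 blade is working harder, compare vCPU-to-core ratios. The sketch below assumes 8 physical cores per E5-2665 and 4 per E5-2643 (Intel's published core counts), ignores Hyper-Threading, and uses a hypothetical load of 80 single-vCPU desktops per blade; 80 is not a number from our tests.

```python
# Physical cores per socket (Intel's published counts for these parts).
CORES_PER_SOCKET = {"E5-2665": 8, "E5-2643": 4}
SOCKETS = 2


def vcpu_per_core(cpu: str, desktops: int, vcpus_per_desktop: int) -> float:
    """vCPU-to-physical-core ratio on a 2-socket blade (Hyper-Threading ignored)."""
    physical_cores = CORES_PER_SOCKET[cpu] * SOCKETS
    return desktops * vcpus_per_desktop / physical_cores


# Hypothetical load: the same 80 single-vCPU desktops on each blade.
for cpu in CORES_PER_SOCKET:
    ratio = vcpu_per_core(cpu, desktops=80, vcpus_per_desktop=1)
    print(f"{cpu}: {ratio:.1f} vCPUs per core")
```

At the same desktop count, the E5-2643 blade carries twice the vCPUs per core (10.0 versus 5.0 here), so each core has less headroom to absorb slower memory access.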

Note that again the 1vCPU test (the blue lines) showed a lower latency for the system with the faster memory bus.

What does it all mean? Going from 16 DIMMs to 24 DIMMs gives you 50% more memory, yet three of the four tests showed that the density penalty for running the bus at the lower speed is negligible. We did observe higher latency in the 1vCPU tests, but that latency still fell within the acceptable range for our testing. Your parameters for success (acceptable latency) might differ, so you may need to take a closer look at this in your environment.

If you need more memory, you are in luck. In deployments that require more memory per virtual desktop (2GB to 4GB), it is better to increase the physical memory of the system, even at the cost of running the memory at a lower speed. Depending on your latency requirements, adding 50% more memory might not give you 50% more desktops, but it will give you more desktops and could save you from having to add another node to your cluster.
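
A rough way to reason about the memory side is to compute a memory-bound ceiling on desktops. This Python sketch assumes 16GB DIMMs (the post doesn't state which DIMM size we used), treats physical memory as the only constraint, and ignores hypervisor overhead and memory overcommit unless you pass a reservation, so treat the numbers as upper bounds rather than predictions. The function name is hypothetical.

```python
DIMM_SIZE_GB = 16  # assumption: 16GB DIMMs; not stated in the post


def memory_bound_desktops(dimms: int, gb_per_desktop: float,
                          reserved_gb: float = 0.0) -> int:
    """Upper bound on desktops if physical memory were the only limit.

    reserved_gb is a placeholder for hypervisor overhead; it defaults to 0
    because real overhead is environment specific.
    """
    usable_gb = dimms * DIMM_SIZE_GB - reserved_gb
    return int(usable_gb // gb_per_desktop)


for dimms in (16, 24):
    for gb in (2, 4):
        print(f"{dimms} DIMMs ({dimms * DIMM_SIZE_GB}GB), {gb}GB/desktop: "
              f"<= {memory_bound_desktops(dimms, gb)} desktops")
```

Under these assumptions, going from 16 to 24 DIMMs raises the ceiling by exactly 50% (from 64 to 96 desktops at 4GB each), but CPU contention and latency can cap real density below that ceiling, which is why adding 50% more memory might not give you 50% more desktops.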

These results are particularly satisfying to me and make the B200 M3 a really stellar blade for VDI compared to what the competition can offer.

What's next? Shawn will cover the effect that memory density has on VDI scalability in the next installment of the series. If you've been enjoying our blog series, please join us for a free webinar discussing the VDI Missing Questions, with Shawn, Jason and Doron! Access the webinar here.

Hot tags:

UCS

Virtualization

VMware

Virtual Desktop Infrastructure (VDI)

citrix

vxi

virtual desktop

cpu
