
When OpenAI released GPT-4 back in March, one of its biggest advantages was its multimodal capability, which would allow ChatGPT to accept image inputs. However, that capability wasn't ready to be deployed until now.

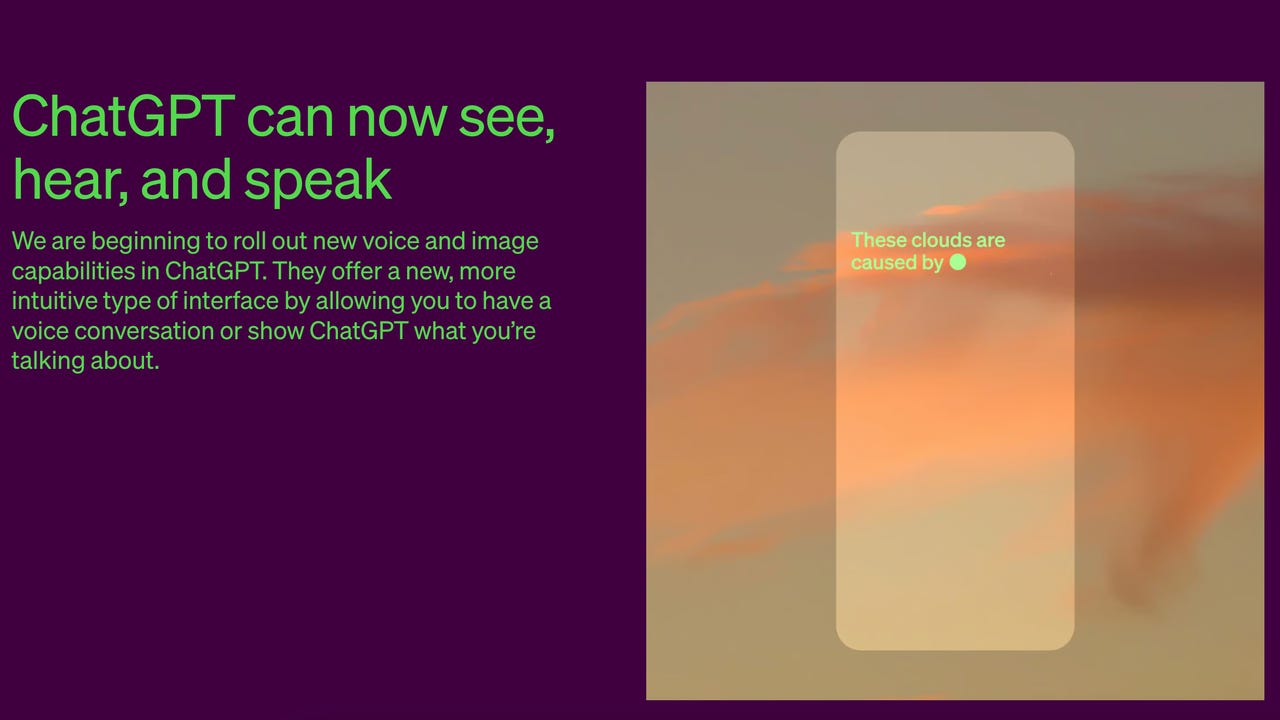

On Monday, OpenAI announced that ChatGPT can now "see, hear and speak," referring to the popular chatbot's new abilities to receive both image and voice inputs and to talk back in voice conversations.


The image input feature can help you get assistance with things you can see, such as solving a math problem on a worksheet, identifying a plant, or suggesting recipes based on the items in your pantry.

In each of these cases, all a user has to do is snap a picture of what they are looking at and add the question they'd like answered. OpenAI says the image understanding capability is powered by GPT-3.5 and GPT-4.

The voice input and output feature gives ChatGPT the same functionality as a voice assistant. To ask ChatGPT for help with a task, users can now simply speak their request, and once ChatGPT has processed it, it reads its response back aloud.

In the demo shared by OpenAI, a user verbally asks ChatGPT to tell a bedtime story about a hedgehog. ChatGPT responds by telling a story aloud, much as a voice assistant like Amazon's Alexa would.


The race for AI-powered assistants is on: just last week, Amazon announced it was supercharging Alexa with a new LLM that would give her ChatGPT-like capabilities, essentially making her a hands-free AI assistant. ChatGPT's voice integration accomplishes the same end result.

To support the voice feature, OpenAI uses Whisper, its speech recognition system, to transcribe a user's spoken words into text, along with a new text-to-speech model that can generate human-like audio from text and just a few seconds of sample speech.

To create the five voices users can choose from, the company collaborated with professional voice actors.

Both the voice and image features will roll out to ChatGPT Plus and Enterprise users over the next two weeks. OpenAI says it will expand access to the features for other users, including developers, soon after.


If you are a Plus or Enterprise user, all you have to do to access the image input feature is tap the photo button in the chat interface and upload an image. To access the voice feature, head to Settings > New Features and opt into voice conversations.

Bing Chat, which is powered by GPT-4, supports image and voice inputs and is entirely free to use. So if you want to test these features but don't have access to them in ChatGPT yet, Bing Chat is a good alternative.
