Image: Google

Google has opened up its AI Test Kitchen mobile app to give everyone some constrained hands-on experience with its latest advances in AI, like its conversational model LaMDA.

Google announced AI Test Kitchen in May, along with the second version of LaMDA (Language Model for Dialogue Applications), and is now letting the public test parts of what it believes is the future of human-computer interaction.

AI Test Kitchen is "meant to give you a sense of what it might be like to have LaMDA in your hands," Google CEO Sundar Pichai said at the time.

AI Test Kitchen is part of Google's plan to ensure its technology is developed with some safety rails. Anyone can join the waitlist for the AI Test Kitchen. Initially, it will be available to small groups in the US. The Android app is available now, while the iOS app is due "in the coming weeks".

While signing up, the user needs to agree to a few things, including "I will not include any personal information about myself or others in my interactions with these demos".

Similar to Meta's recent public preview of its chatbot AI model, BlenderBot 3, Google also warns that its early previews of LaMDA "may display inaccurate or inappropriate content". Meta warned as it opened up BlenderBot 3 to US residents that the chatbot may 'forget' that it's a bot and may "say things we are not proud of".

The two companies are acknowledging that their AI may occasionally come across as politically incorrect, as Microsoft's Tay chatbot did in 2016 after the public fed it nasty inputs. And like Meta, Google says LaMDA has undergone "key safety improvements" to keep it from giving inaccurate and offensive responses.

But unlike Meta, Google appears to be taking a more constrained approach, putting boundaries on how the public can communicate with LaMDA. So far, Google has only exposed LaMDA to Googlers. Opening it up to the public may allow Google to improve answer quality faster.

Google is releasing AI Test Kitchen as a set of demos. The first, 'Imagine it', lets you name a place, whereupon the AI offers paths to "explore your imagination".

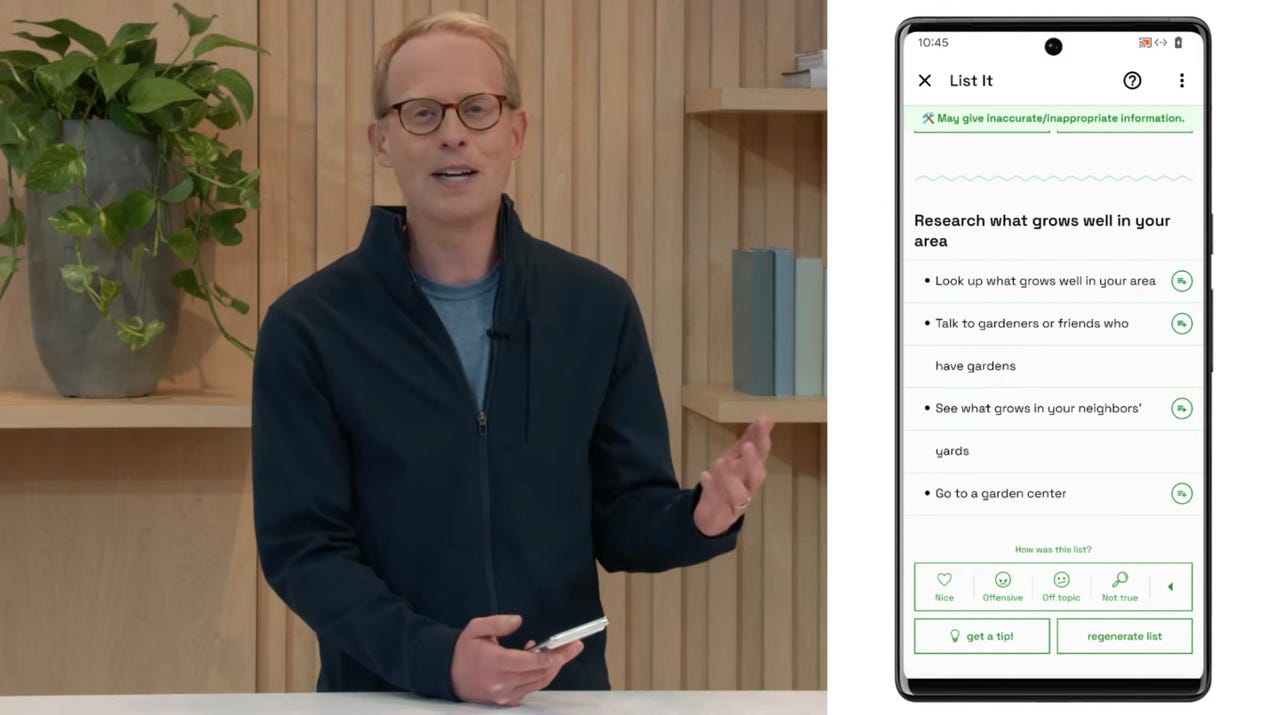

The second 'List it' demo allows you to 'share a goal or topic' that LaMDA then attempts to break down into a list of helpful subtasks.

The third demo is 'Talk about it (Dogs edition)', which appears to be the most free-ranging test -- albeit constrained to canine matters: "You can have a fun, open-ended conversation about dogs and only dogs, which explores LaMDA's ability to stay on topic even if you try to veer off-topic," says Google.

LaMDA and BlenderBot 3 are pursuing the best performance in language models that simulate dialogue between a computer and humans.

LaMDA is a 137-billion parameter large language model, while Meta's BlenderBot 3 is a 175-billion parameter "dialogue model capable of open-domain conversation with access to the internet and a long-term memory".

Google's internal tests have been focussed on improving the safety of the AI. Google says it has been running adversarial testing to find new flaws in the model and has recruited 'red team' members -- attack experts who can stress the model in a way the unconstrained public might -- who have "uncovered additional harmful, yet subtle, outputs," according to Tris Warkentin of Google Research and Josh Woodward of Labs at Google.

While Google wants to ensure safety and avoid its AI saying shameful things, Google can also benefit from putting it out in the wild to experience human speech that it can't predict. Quite the dilemma. In contrast to one Google engineer who questioned whether LaMDA was sentient, Google emphasises several limitations that Microsoft's Tay suffered from when exposed to the public.

"The model can misunderstand the intent behind identity terms and sometimes fails to produce a response when they're used because it has difficulty differentiating between benign and adversarial prompts. It can also produce harmful or toxic responses based on biases in its training data, generating responses that stereotype and misrepresent people based on their gender or cultural background. These areas and more continue to be under active research," say Warkentin and Woodward.

Google says the protections it has added so far have made its AI safer, but have not eliminated the risks. Protections include filtering out words or phrases that violate its policies, which "prohibit users from knowingly generating content that is sexually explicit; hateful or offensive; violent, dangerous, or illegal; or divulges personal information."

Also, users shouldn't expect Google to delete what they have said once they complete a LaMDA demo.

"I will be able to delete my data while using a particular demo, but once I close out of the demo, my data will be stored in a way where Google cannot tell who provided it and can no longer fulfill any deletion requests," the consent form states.

Hot tags:

Artificial intelligence

Innovation and Innovation
