Scientists are finding that more can be achieved by removing three-quarters of a neural net.

A major pursuit in the science of artificial intelligence (AI) is the balance between how big a program is and how much data it uses. After all, it costs real money, tens of millions of dollars, to buy Nvidia GPU chips to run AI, and to gather billions of bytes of data to train neural networks -- and how much you need is a question with very practical implications.

Google's DeepMind unit last year codified the exact balance between computing power and training data as a kind of law of AI. That rule of thumb, which has come to be called "The Chinchilla Law", says you can reduce the size of a program to just a quarter of its initial size if you also train it on four times as much data.
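To make that trade concrete, here is a minimal arithmetic sketch. It assumes the widely used approximation that training compute is roughly 6 × parameters × training tokens; that formula and the starting numbers are illustrative assumptions, not figures from the article.

```python
import math


def training_flops(params: float, tokens: float) -> float:
    """Rough training compute, using the common 6 * N * D approximation (an assumption)."""
    return 6.0 * params * tokens


# Hypothetical starting point; these numbers are purely illustrative.
base_params = 4e9    # 4 billion parameters
base_tokens = 80e9   # 80 billion training tokens

# The trade described above: a quarter of the size, four times the data.
small_params = base_params / 4
more_tokens = base_tokens * 4

base_cost = training_flops(base_params, base_tokens)
traded_cost = training_flops(small_params, more_tokens)

print(f"original model: {base_cost:.3e} FLOPs")
print(f"smaller model:  {traded_cost:.3e} FLOPs")
print("same compute budget:", math.isclose(base_cost, traded_cost))
```

Under that approximation the two configurations cost about the same to train, which is why the trade is framed as a balance rather than a saving.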


The point of Chinchilla, and it's an important one, is that programs can achieve an optimal result in terms of accuracy while being less gigantic. Build smaller programs, but train for longer on the data, says Chinchilla. Less is more, in other words, in deep-learning AI, for reasons not yet entirely understood.

In a paper published this month, DeepMind and its collaborators build upon that insight by suggesting it's possible to do even better by stripping away whole parts of the neural network, pushing performance further once a neural net has hit a wall.


According to lead author Elias Frantar of Austria's Institute of Science and Technology, and collaborators at DeepMind, you can get the same results in terms of accuracy from a neural network that's half the size of another if you employ a technique called "sparsity".

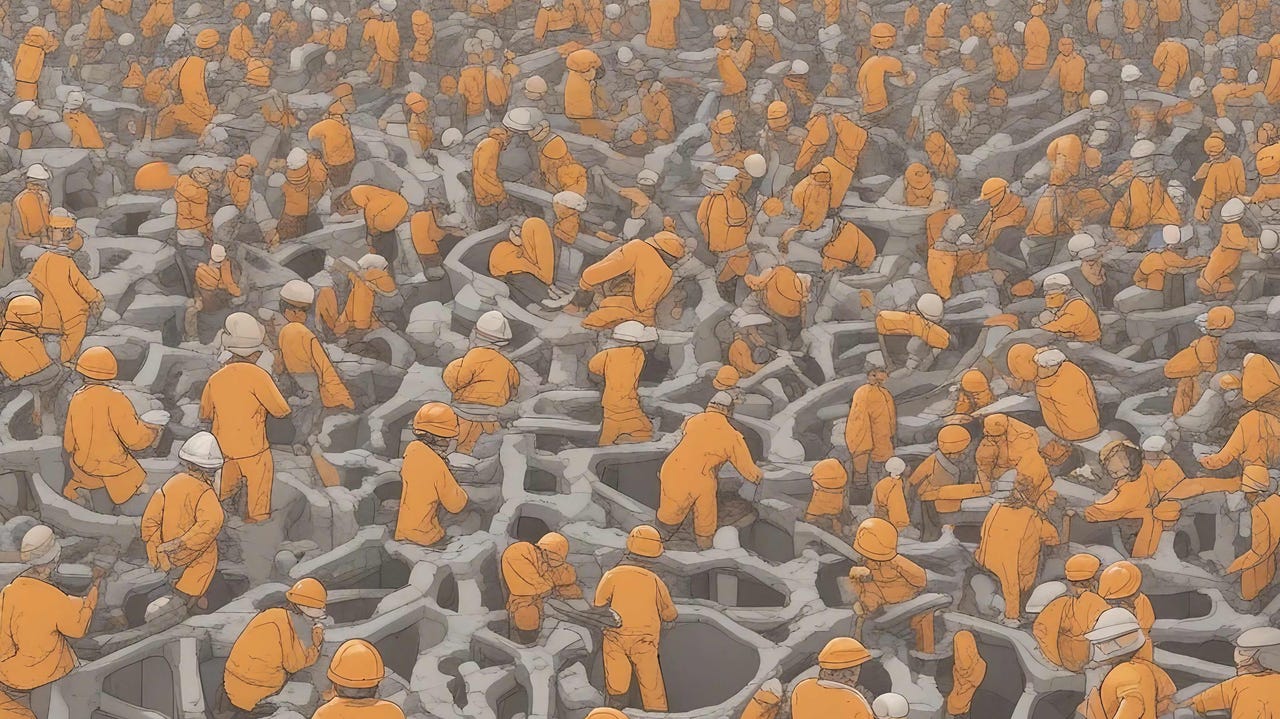

Sparsity, an obscure element of neural networks that has been studied for years, is a technique that borrows from the actual structure of human neurons. Sparsity refers to turning off some of the connections between neurons. In human brains, these connections are known as synapses.

The vast majority of human synapses don't connect. As scientist Torsten Hoefler and team at the ETH Zurich observed in 2021, "Biological brains, especially the human brain, are hierarchical, sparse, and recurrent structures," adding, "the more neurons a brain has, the sparser it gets."

The thinking goes that if you could approximate that natural phenomenon of the very small number of connections, you could do a lot more with any neural net with a lot less effort -- and a lot less time, money, and energy.


In an artificial neural network, such as a deep-learning AI model, the equivalent of synaptic connections is the network's "weights" or "parameters". Synapses that don't have connections would be weights that have zero values -- they don't compute anything, so they don't take up any computing energy. AI scientists refer to sparsity, therefore, as zeroing out the parameters of a neural net.
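As a rough illustration of what zeroing out weights looks like, the sketch below uses magnitude pruning: it sets the smallest-magnitude weights to zero. That heuristic is a common one chosen here for simplicity, not necessarily the method used by Frantar and team, and the function name `magnitude_prune` and the toy weights are hypothetical.

```python
import random


def magnitude_prune(weights: list[float], sparsity: float) -> list[float]:
    """Return a copy of `weights` with the smallest-magnitude fraction set to 0.0.

    `sparsity` is the fraction of weights to zero out (0.75 = three-quarters).
    Magnitude pruning is an assumed heuristic, used only for illustration.
    """
    if not 0.0 <= sparsity <= 1.0:
        raise ValueError("sparsity must be between 0 and 1")
    n_zero = int(len(weights) * sparsity)
    # Indices ordered from smallest to largest absolute value.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = list(weights)
    for i in order[:n_zero]:
        pruned[i] = 0.0
    return pruned


random.seed(0)
# Toy "layer" of 16 weights drawn from a normal distribution.
weights = [random.gauss(0.0, 1.0) for _ in range(16)]
sparse_weights = magnitude_prune(weights, sparsity=0.75)

nonzero = sum(1 for w in sparse_weights if w != 0.0)
print(f"{nonzero} of {len(sparse_weights)} weights remain non-zero")
```

Only the weights that survive count toward the "non-zero parameters" that the researchers use when comparing sparse and dense models.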

In the new DeepMind paper, posted on the arXiv pre-print server, Frantar and team ask, if smaller networks can equal the work of larger networks, as the prior study showed, how much can sparsity help push performance even further by removing some weights?

The researchers discover that if you zero out three-quarters of the parameters of a neural net -- making it more sparse -- it can do the same work as a neural net over two times its size.

As they put it: "The key take-away from these results is that as one trains significantly longer than Chinchilla (dense compute optimal), more and more sparse models start to become optimal in terms of loss for the same number of non-zero parameters." The term "dense" refers to a neural net that has no sparsity, so that all its synapses are operating.

"This is because the gains of further training dense models start to slow down significantly at some point, allowing sparse models to overtake them." In other words, normal, non-sparse models -- dense models -- start to level off with further training, and that is where sparse versions take over.


The practical implication of this research is striking. When a neural network starts to reach its limit in terms of performance, actually reducing the number of its neural parameters that function -- zeroing them out -- will extend the neural net's performance further as you train the neural net for a longer and longer time.

"Optimal sparsity levels continuously increase with longer training," write Frantar and team. "Sparsity thus provides a means to further improve model performance for a fixed final parameter cost."

For a world worried about the energy cost of increasingly power-hungry neural nets, the good news is that scientists are finding even more can be done with less.
